Proctoring is an effective way to deter dishonest behavior. There’s no doubt about it: Research shows that 70% of students cheat during unmonitored exams but only 15% do so during monitored exams. Why is there such a big discrepancy? One factor is the fear of getting caught. According to a survey by Wiley, about 73% of students claim they’re less likely to cheat if there’s a risk of detection.

Despite the proven effectiveness of proctoring, methods for doing so vary widely—and have varying degrees of success. Recent data suggests that while some proctoring methods may appear effective on the surface, they leave significant gaps in coverage that could compromise the integrity of the testing process.

Comparison of Different Proctoring Methods

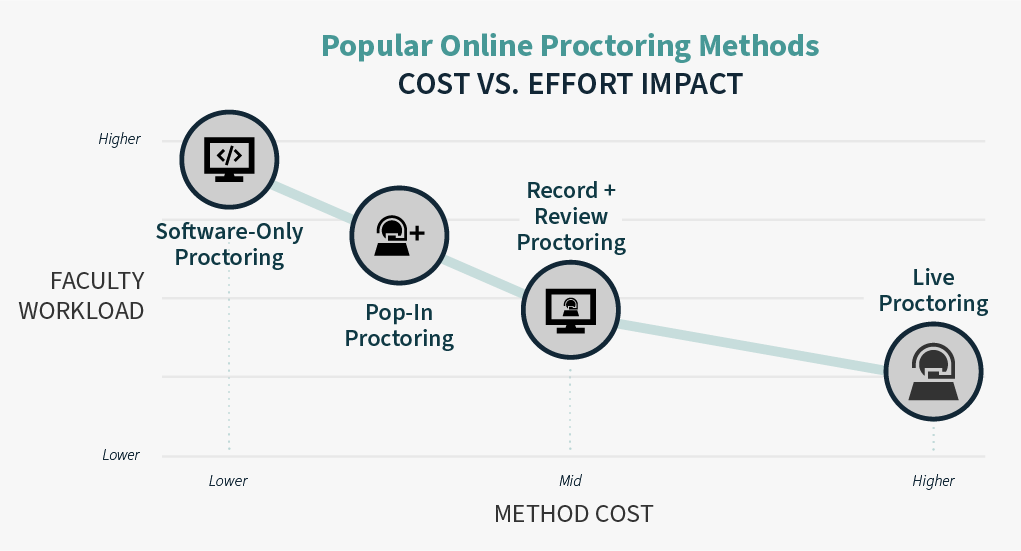

To contextualize the recent data, we need to start by outlining the proctoring methods that institutions commonly use. Each option offers its own set of benefits—but also presents certain challenges and limitations.

- Fully Automated Proctoring: Software-only proctoring systems use algorithms and artificial intelligence to monitor students during exams. These low-cost systems are designed to flag suspicious behaviors, but they’re ineffective without a human taking the time to review and interpret session activity.

- Pop-In Proctoring: This method involves a proctor “popping in” to monitor students for unspecified periods during an automated exam. It’s marketed as a low-cost solution that combines automation with occasional human oversight and review, but there’s uncertainty around how proctors choose which sessions to pop in to and how long they are present.

- Record and Review Proctoring: In this approach, automated exams are recorded and reviewed in full by a proctor after the fact. This middle-ground method allows for a thorough review of flagged behaviors without continuous live observation—and without placing the burden of review on faculty and staff.

- Live Proctoring: The most hands-on approach, live proctoring involves a human proctor monitoring students in real time throughout the entire exam. This method provides the highest level of security and student support, but it also comes at a higher price point.

Institutional decision-makers often choose automated or pop-in solutions for their perceived benefits. On paper, they look cost-effective, scalable, and less labor-intensive. In reality, they often prove the opposite. Many institutions don’t realize that these methods leave large portions of an exam unchecked, and that many sessions go entirely unmonitored. This allows academic dishonesty to go undetected. In the long run, the cost of addressing the consequences of this oversight can negate the initial savings.

The Risks of Fully Automated and Pop-In Proctoring Methods

What Goes Unnoticed During Gaps in Observation?

At face value, low-cost solutions—like pop-in proctoring—are attractive for their affordability and ease of implementation. But the gaps in observation and lack of human review can have serious implications.

Consider the following statistic from our proprietary research: Pop-in proctors only “pop in” to about 2.5% of all exams. There’s also no clear data on how long the proctors remain in these sessions. This means students are not monitored by a human proctor during 97.5% of exams. Even in the sessions where proctors do “pop in,” the effectiveness remains questionable given their uncertain duration. With such minimal observation, how can you trust that academic integrity is being upheld? And when so few of your exams are monitored (for an unknown amount of time), what does that say about the validity of your program?

During these unmonitored sessions, critical moments of potential cheating could easily go unnoticed: a cell phone placed just outside the camera’s view, unpermitted notes taped to a keyboard, or unauthorized assistance from someone entering and exiting the room off-screen. These incidents may seem rare or unlikely, but they’re very concerning when you consider that up to 70% of students cheat during unmonitored exams.

Let’s think about this another way. Imagine you’re administering a 60-minute in-person exam, but you only step into the room for 5 minutes. What happens during the 55 minutes you aren’t there? Even if you have a video recording of the room, you’d have to go back and review it to get a complete picture of what occurred. And we know that reviewing session footage can be incredibly time-consuming. On average, it takes 47 minutes to review a higher-ed midterm or final exam. For a batch of 100 exams, that adds up to roughly 78 hours, about as long as it would take to drive from Los Angeles to New York and back again. We don’t believe instructors can or should handle this burden.
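The review-time arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, assuming every exam takes exactly the 47-minute average to review; the function name `total_review_hours` is hypothetical, and real review times will vary with exam length and the number of flagged events.

```python
# Estimate the total human review time for a batch of recorded exams.
# Assumption: every exam takes the article's stated 47-minute average to
# review in full; real review times vary by exam length and flag count.
AVG_REVIEW_MINUTES = 47  # average review time per midterm/final exam


def total_review_hours(num_exams: int, minutes_per_exam: float = AVG_REVIEW_MINUTES) -> float:
    """Return the hours needed to review `num_exams` recordings (hypothetical helper)."""
    return num_exams * minutes_per_exam / 60


if __name__ == "__main__":
    # 100 exams x 47 minutes = 4,700 minutes, or about 78.3 hours
    print(f"{total_review_hours(100):.1f} hours")
```

Doubling the batch to 200 exams doubles the burden to roughly 157 hours, which is why full after-the-fact review rarely scales when it falls on faculty.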

Can You Trust AI to Catch What Pop-In Proctoring Misses?

AI use in proctoring is still in its infancy, and while it shows promise in detecting suspicious activity, it’s far from foolproof. Our research on pop-in proctoring reveals that AI flags about 50% of automated sessions for suspicious activity. However, only 10% of these flagged sessions are reviewed by a human proctor after they’ve ended. This means 90% of potentially problematic exams are never checked by a trained professional. If no one is reviewing the footage, how can we trust that AI is accurately identifying all cheating behaviors?

To put this in perspective, let’s return to our previous hypothetical. Say you have 100 exams being proctored via the pop-in method. If 50% are flagged by automation, that’s 50 exams. But if only 10% of these flagged sessions are reviewed by a human, you’re left with just 5 exams being further investigated. Combine this with the fact that only 2.5% of sessions are “popped in” to by a proctor, and the gaps become glaringly obvious. The result? A huge portion of your exams are inadequately monitored, allowing academic dishonesty to slip through the cracks.
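The same 100-exam hypothetical can be written out as a simple coverage funnel. This is a rough sketch that treats the article’s rates as exact fractions; the names `coverage_funnel` and `max_with_human_attention` are hypothetical, and the sketch assumes, as the scenario implies, that unflagged sessions are never reviewed after the fact. Because a popped-in session might also be flagged and reviewed, the combined figure is an upper bound rather than an exact count.

```python
# Coverage funnel for the 100-exam pop-in proctoring hypothetical.
# Rates are taken from the article; treating them as exact is a simplification.
FLAG_RATE = 0.50            # share of sessions the AI flags as suspicious
FLAGGED_REVIEW_RATE = 0.10  # share of flagged sessions a human reviews afterward
POP_IN_RATE = 0.025         # share of sessions a proctor "pops in" to live


def coverage_funnel(num_exams: int) -> dict:
    """Return how many exams reach each stage of human attention (hypothetical helper)."""
    flagged = num_exams * FLAG_RATE
    flagged_and_reviewed = flagged * FLAGGED_REVIEW_RATE
    popped_in = num_exams * POP_IN_RATE
    # Upper bound: assumes no session is both popped in to and reviewed,
    # and that unflagged sessions are never reviewed after the fact.
    max_with_human_attention = flagged_and_reviewed + popped_in
    return {
        "flagged": flagged,
        "flagged_and_reviewed": flagged_and_reviewed,
        "popped_in": popped_in,
        "max_with_human_attention": max_with_human_attention,
        "min_without_human_attention": num_exams - max_with_human_attention,
    }


if __name__ == "__main__":
    for stage, count in coverage_funnel(100).items():
        print(f"{stage}: {count:g}")
```

For 100 exams, the funnel yields 50 flagged sessions, 5 of those reviewed, and 2.5 popped in, so at most 7.5 exams receive any human attention and at least 92.5 receive none.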

The Institutional Perils of Relying on Pop-In Proctoring

Sadly, institutions that rely on fully automated systems—or assume that occasional pop-ins by proctors provide enough assurance—risk overlooking potential instances of cheating or misjudging innocent actions as suspicious. This overreliance can create a false sense of security among administrators, faculty, staff, and students.

We’re not trying to demonize these methods. They have very legitimate use cases and can be used effectively. But the key distinction is this: If every minute of every proctored session isn’t monitored in real time or retroactively by an actual human, the integrity of the testing process is at risk. And our data shows that between 90% and 97.5% of all exams proctored via these methods are not monitored by a human. This means institutions may be unwittingly undermining the credibility of their own exams.

The integrity of your program is extremely important, and the stakes are at an all-time high. If a cheating scandal emerged, how would your institution’s reputation, accreditation, and enrollment be impacted? How would it impact your students’ trust in the value of their degrees? And how much money would you have to dole out to address the situation?

Weighing the True Cost of Pop-In Proctoring

Pop-in proctoring solutions might seem like an efficient way to manage online exams, but the risks they pose to academic integrity can be serious. That raises the question: Are the short-term savings of a cheaper proctoring solution worth the long-term costs of unchecked cheating?

To learn more about maintaining exam integrity, check out our article 8 No-Cost Ways to Mitigate Academic Misconduct in Higher Education.